Hanbit OH

오 한빛

Researcher, Ph.D. in Engineering, Japan.

I am a research scientist at AIST (国立研究開発法人産業技術総合研究所, National Institute of Advanced Industrial Science and Technology) in Odaiba, Tokyo, Japan. My research topics are robotics, manipulation, and machine learning (AI robotics).

Oh et al., Efficient Imitation Learning via Safe Self-augmentation with Demonstrator-annotated Precision, Under review, 2025.

Oh et al., Leveraging Demonstrator-Perceived Precision for Safe Interactive Imitation Learning of Clearance-Limited Tasks, IEEE Robotics and Automation Letters, 2024.

Oh et al., Leveraging Student's t-Regression Model for Robust In-context Imitation Learning of Robot Manipulation, IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2025.

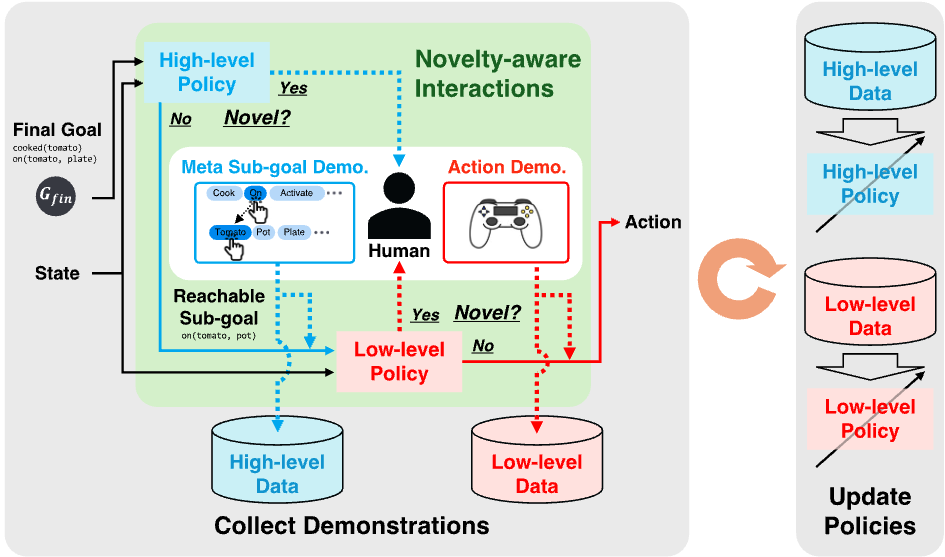

Ochoa* and Oh* et al., Interactive Sub-Goal-Planning Imitation Learning for Long-Horizon Tasks With Diverse Goals, IEEE Access, 2024. *: Equal contribution.

📚 2025-present, Research Scientist in the Embodied AI Research Team, Artificial Intelligence Research Center, National Institute of Advanced Industrial Science and Technology in Odaiba, Tokyo.

📚 2024-2025, Research Scientist in the Automation Research Team, Industrial CPS Research Center, National Institute of Advanced Industrial Science and Technology in Odaiba, Tokyo.

👨‍🎓 2021-2024, Ph.D. student, Division of Advanced Science and Technology, Nara Institute of Science and Technology (NAIST).

👨‍🎓 2019-2021, Master's student, Division of Advanced Science and Technology, Nara Institute of Science and Technology (NAIST).

👨‍🎓 2013-2019, Bachelor's student, Department of Mechanical and System Engineering, Faculty of Engineering, Okayama University.

🎖️ 2014-2015, Military service, Republic of Korea.

Download CV (PDF)